New system can teach a group of cooperative or competitive AI agents to find an optimal long-term solution

MIT researchers have developed a technique that allows artificial intelligence agents to think further into the future, which could improve the long-term performance of cooperative or competitive AI agents. Credit: Jose-Luis Olivares, MIT, with MidJourney

Imagine two teams playing against each other on a soccer field. Players can cooperate to achieve goals and compete with other players with conflicting interests. That’s how the game works.

Creating artificial intelligence agents that can learn to compete and cooperate as effectively as humans remains a thorny problem. A key challenge is enabling AI agents to anticipate the future behaviors of other agents when they are all learning simultaneously.

Because of the complexity of this problem, current approaches tend to be short-sighted; agents can only guess the next few moves of their teammates or opponents, which leads to poor performance in the long run.

Researchers from MIT, the MIT-IBM Watson AI Lab, and elsewhere have developed a new method that gives AI agents foresight. Their machine learning framework allows cooperative or competitive AI agents to consider what other agents will do as time approaches infinity, not just over the next few steps. The agents then adjust their behavior accordingly to influence the future behaviors of other agents and arrive at an optimal, lasting solution.

The framework could be used by a group of autonomous drones working together to search for a hiker lost in the jungle, or by self-driving cars trying to keep their passengers safe by predicting the future movements of other vehicles on a busy highway.

“When AI agents are cooperating or competing, what matters most is when their behaviors converge at some point in the future. There are a lot of transient behaviors along the way that don’t matter a lot in the long run,” said Dong-Ki Kim, a graduate student in MIT’s Laboratory for Information and Decision Systems (LIDS) and lead author of the paper describing the framework, which is designed to reach this converged behavior.

More agents, more problems

The researchers focused on a problem known as multi-agent reinforcement learning. Reinforcement learning is a form of machine learning in which an AI agent learns by trial and error. The researchers give the agent rewards for “good” behaviors that help it achieve its goals. The agent adjusts its behavior to maximize that reward until it eventually becomes an expert at a task.
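The trial-and-error loop described above can be illustrated with a toy example. The sketch below is a generic epsilon-greedy learner on a two-choice problem, not the researchers' method; the function name `train_bandit`, the reward probabilities, and the parameter values are all assumptions made up for illustration.

```python
import random

def train_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn the value of each action by trial and error (epsilon-greedy)."""
    rng = random.Random(seed)
    values = [0.0] * len(reward_probs)  # running estimate of each action's reward
    counts = [0] * len(reward_probs)    # how many times each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.randrange(len(reward_probs))
        else:
            # Exploit: pick the action that currently looks best.
            action = max(range(len(values)), key=values.__getitem__)
        # The environment hands out a reward of 1 for a "good" outcome.
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts

# Hypothetical task: action 1 pays off far more often than action 0.
values, counts = train_bandit([0.2, 0.8])
best_action = max(range(len(values)), key=values.__getitem__)
```

After enough trials the learner spends most of its time on the action that pays off more often, which is the single-agent version of "becoming an expert at a task."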

But when multiple cooperative or competitive agents are learning simultaneously, things become increasingly complicated. As agents consider more of the future steps of their fellow agents, and how their own behavior affects others, the problem soon requires too much computing power to solve effectively. This is why other methods focus only on the short term.

“The AIs really want to think about the end of the game, but they don’t know when the game will end. They need to think about how to keep adjusting their behavior to infinity in order to be able to win far in the future. Our paper essentially proposes a new goal of allowing AI to think about infinity,” Kim said.

But since it is not possible to plug infinity into an algorithm, the researchers designed their system so that agents focus on a point in the future where their behavior will converge with that of the other agents, known as an equilibrium. An equilibrium determines the long-run performance of agents, and multiple equilibria can exist in a multi-agent scenario.

Thus, an effective agent actively influences the future behaviors of other agents in such a way that they reach an equilibrium that is desirable from the agent’s point of view. If all the agents influence each other, they converge to a general concept that the researchers call an “active equilibrium.”

The machine learning framework they developed, called FURTHER (short for FUlly Reinforcing acTive influence witH averagE Reward), allows agents to learn how to adjust their behavior as they interact with other agents to achieve this active equilibrium.
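The "average reward" in the framework's name points to a common way of scoring behavior over an unbounded horizon. The formula below is the standard textbook definition of long-run average reward, offered here as an assumption about the kind of objective meant; the symbols ($\rho$ for the long-run score, $\pi$ for an agent's behavior policy, $r_t$ for the reward at step $t$, and $T$ for the horizon) are introduced for illustration and are not taken from the article.

```latex
\rho(\pi) \;=\; \lim_{T \to \infty} \frac{1}{T}\,
\mathbb{E}_{\pi}\!\left[ \sum_{t=0}^{T-1} r_t \right]
```

Because the sum is divided by $T$ before the limit is taken, rewards earned during any finite stretch of early, transient behavior contribute nothing to $\rho$; only the behavior the agents eventually settle into affects the score.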

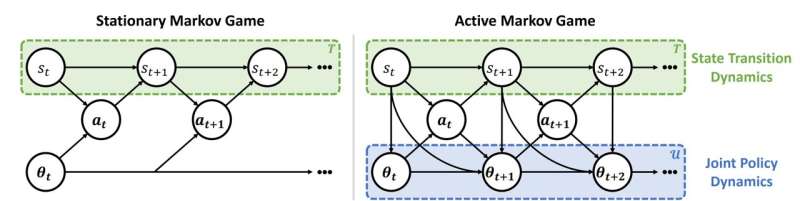

In a stationary Markov game setting, agents wrongly assume that other agents will have stationary policies in the future. In contrast, agents in an active Markov game recognize that other agents have nonstationary policies based on Markovian update functions. Credit: arXiv (2022). DOI: 10.48550/arxiv.2203.03535

FURTHER does this using two machine learning modules. The first, an inference module, allows the agent to guess the future behaviors of other agents, and the learning algorithms they use, based solely on their previous actions.

This information is fed into a reinforcement learning module that the agent uses to tailor its behavior and influence other agents in a way that maximizes its reward.
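The division of labor between the two modules can be pictured with a much simpler stand-in. The sketch below is not the researchers' implementation: it swaps in a frequency-count opponent model for the inference module and a one-step best response for the reinforcement learning module, and it does not try to infer the other agent's learning algorithm. The payoff table and the names `infer_policy` and `choose_action` are hypothetical.

```python
from collections import Counter

# Hypothetical payoffs for "our" agent: PAYOFF[my_action][their_action].
PAYOFF = {
    "left":  {"left": 1.0, "right": 0.0},
    "right": {"left": 0.0, "right": 2.0},
}

def infer_policy(observed_actions, actions=("left", "right")):
    """Inference step: estimate the other agent's action probabilities
    from its past actions (add-one smoothing avoids zero estimates)."""
    counts = Counter(observed_actions)
    total = len(observed_actions) + len(actions)
    return {a: (counts[a] + 1) / total for a in actions}

def choose_action(predicted_policy):
    """Decision step: pick the action with the highest expected payoff
    against the predicted behavior of the other agent."""
    def expected_payoff(my_action):
        return sum(prob * PAYOFF[my_action][their_action]
                   for their_action, prob in predicted_policy.items())
    return max(PAYOFF, key=expected_payoff)

# The other agent has mostly played "left" so far.
history = ["left", "left", "left", "left", "left", "right"]
predicted = infer_policy(history)
action = choose_action(predicted)
```

Here the prediction is built only from the other agent's past actions, and the decision step consumes that prediction to choose a response. A learned version would replace the payoff lookup with a policy trained to maximize long-run reward.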

“The challenge was to think about infinity. We had to use a lot of different mathematical tools to enable that and make some assumptions for it to work in practice,” Kim said.

Winning in the long run

They tested their approach against other multi-agent reinforcement learning frameworks in a number of different scenarios, including a pair of robots fighting sumo-style and a battle pitting two teams of 25 agents against each other. In both cases, AI agents using FURTHER won the game more often.

Since their approach is decentralized, meaning that agents learn to win the game independently, it is also more scalable than other methods that require a central computer to control the agents, Kim explained.

The researchers used games to test their approach, but FURTHER can be used to tackle any type of multi-agent problem. It could, for example, be applied by economists seeking to develop sound policy in situations where many interacting entities have behaviors and interests that change over time.

Economics is an application that Kim is particularly excited to study. He also wants to delve deeper into the concept of an active equilibrium and continue to advance the FURTHER framework.

The research paper is available on arXiv.

Dong-Ki Kim et al, Influencing Long-Term Behavior in Multiagent Reinforcement Learning, arXiv (2022). DOI: 10.48550/arxiv.2203.03535

Provided by

Massachusetts Institute of Technology

This story is reprinted courtesy of MIT News (web.mit.edu/newsoffice/), a popular website that covers MIT research, innovation, and teaching.

Citation: New system can teach a group of cooperative or competitive AI agents to find an optimal long-term solution (2022, November 23) retrieved 24 November 2022 from https://techxplore.com/news/2022-11-group-cooperative-competition-ai-agents.html
