Google Gemini Live Demo Failed Twice During Pixel 9 Event—Reminding Us Why You Can’t Always Trust AI

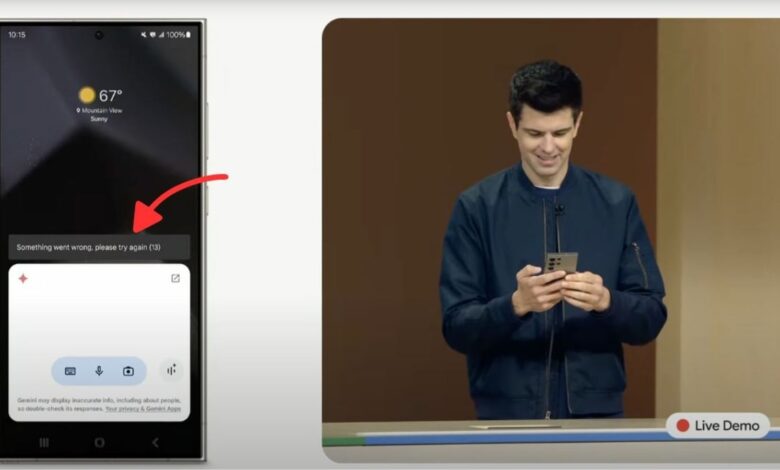

Google launched the Pixel 9 range of devices yesterday at the Made by Google event. Before unveiling the new Pixel 9, Pixel 9 Pro, and Pixel 9 Pro Fold, however, Google showed off the new Gemini features in a live demo on stage. Unfortunately, the demo failed, not once but twice. The failure came while Google was trying to showcase how well the new Gemini mobile AI features work with the calendar app when given information through an image. After the request was made by voice and Gemini had spent a few seconds processing it, the assistant became unresponsive. This happened twice, and the demo only worked once the device was switched to a Samsung Galaxy S24 Ultra.


Why You Can’t Always Trust AI

That incident isn’t the only time Gemini has gone awry. During a demonstration of the new Magic Editor feature, Google’s attempt to expand on Magic Eraser, the AI created a strange object in the second photo after it was asked to manipulate an image by adding a hot air balloon. This shows that generative AI, at least in its current form, isn’t always reliable.

Companies like Google have acknowledged this themselves. In February, Google stated that Gemini is a creative and productivity tool, and that it “may not always be reliable, especially when generating images or text about current events, developing news, or hot topics. It will make mistakes.” Google attributed this to “hallucinations” in its large language models and said there are cases where the AI will simply get things wrong.


Google Gemini’s History of Missteps

If you recall, earlier this year, the Google Gemini app (formerly Bard) introduced the ability to generate images, but Google had to pull the feature after it generated inaccurate and sometimes offensive images.

Google’s Prabhakar Raghavan later admitted this in a blog post. As a result, Google had to temporarily disable the feature to avoid offending people and causing further damage.

The problem isn’t limited to Google Gemini, either. Other big AI companies, like OpenAI, have also admitted that their AI models can “occasionally” provide inaccurate information. And the truth is, things like this are to be expected, given the speed at which these models are being developed in an effort to achieve Artificial General Intelligence (AGI).